How to Start Learning Computer Graphics Programming

Ever since I opened up my direct messages on Twitter and invited everyone to ask me computer graphics questions, the question I get most often is "How can I get started with graphics programming?". Since I am getting tired of answering the same question over and over again, I will use this post to compile all the advice I have on the topic.

Advice 1: Start with Raytracing and Rasterization

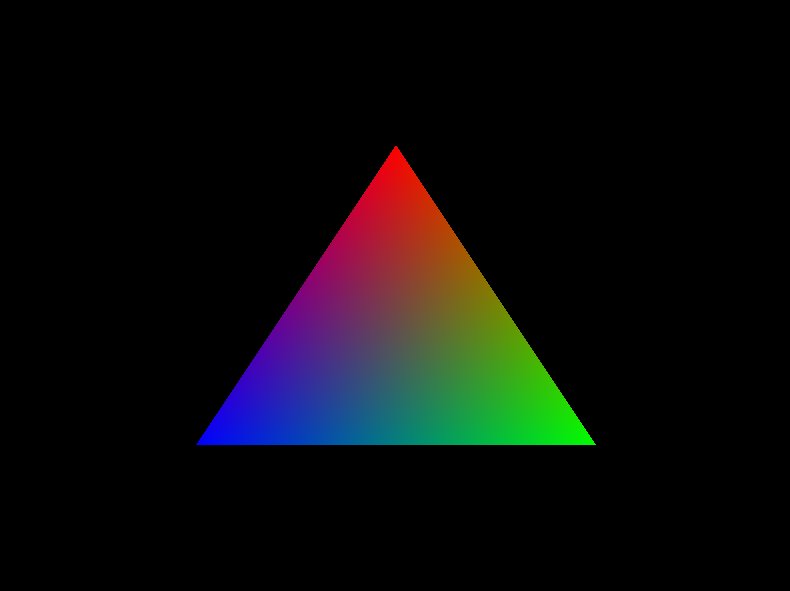

Quite a few APIs for programming the GPU have appeared over the years: Direct3D, OpenGL, Vulkan, Metal, WebGL, and so on. These APIs can be difficult to get started with, since they often require a lot of boilerplate code, and I do not consider them beginner friendly at all. In these APIs, even figuring out how to draw a single triangle is a massive undertaking for a complete beginner to graphics. Of course, an alternative is to use a game engine like Unity or Unreal Engine. In that case, the game engine does the tedious work of talking to the graphics API for you. But I think that even a game engine is too much to learn for a complete beginner, and that the time should be spent on something a bit simpler.

Instead, what I recommend for beginners is that they write either a raytracer or a software rasterizer (or both!). Put simply, a raytracer is a program that renders 3D scenes by sending out rays from every pixel on the screen and doing a whole bunch of intersection calculations and physical lighting calculations in order to figure out the final color of each pixel. A software rasterizer renders 3D scenes (which in the majority of cases are just a bunch of triangles) like this: for every triangle we want to draw, we figure out which pixels on the screen that triangle covers, and then for each such pixel, we calculate how the light interacts with the point on the triangle that corresponds to the pixel. From this light interaction calculation, we obtain the final color of the pixel. Rasterization is much faster than raytracing, and it is the algorithm that modern GPUs use for drawing 3D scenes. Software rasterization simply means that we are doing this rasterization on the CPU instead of the GPU.
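
To give a feel for how small the core of a software rasterizer can be, here is a minimal sketch of the "which pixels does this triangle cover" step. It is only one possible approach (edge functions evaluated at pixel centers), the names `edge` and `rasterize_triangle` are my own, and the triangle is assumed to already be in 2D screen coordinates. Shading is left out; a real renderer would compute the pixel color at the spot marked in the comment.

```python
import math

def edge(a, b, p):
    """Edge function: positive on one side of the line a->b, negative on the other."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def rasterize_triangle(v0, v1, v2, width, height):
    """Return the set of (x, y) pixels whose centers lie inside the triangle."""
    covered = set()
    area = edge(v0, v1, v2)
    if area == 0:
        return covered  # degenerate triangle covers no pixels
    # Only visit pixels in the triangle's bounding box, clamped to the screen.
    xs = (v0[0], v1[0], v2[0])
    ys = (v0[1], v1[1], v2[1])
    min_x, max_x = max(math.floor(min(xs)), 0), min(math.ceil(max(xs)), width - 1)
    min_y, max_y = max(math.floor(min(ys)), 0), min(math.ceil(max(ys)), height - 1)
    for y in range(min_y, max_y + 1):
        for x in range(min_x, max_x + 1):
            p = (x + 0.5, y + 0.5)  # sample at the pixel center
            w0, w1, w2 = edge(v1, v2, p), edge(v2, v0, p), edge(v0, v1, p)
            # Inside when all three edge functions agree in sign with the area,
            # which makes the test work for either winding order.
            if all(w * area >= 0 for w in (w0, w1, w2)):
                covered.add((x, y))  # a real renderer would shade the pixel here
    return covered

# Demo: draw one triangle into a 24x10 character "framebuffer".
WIDTH, HEIGHT = 24, 10
pixels = rasterize_triangle((2, 1), (22, 3), (8, 9), WIDTH, HEIGHT)
for y in range(HEIGHT):
    print("".join("#" if (x, y) in pixels else "." for x in range(WIDTH)))
```

Running it prints the triangle as `#` characters. From here, the natural next steps are interpolating colors with the three edge values and adding a depth buffer.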

Both rasterization and raytracing are actually pretty simple algorithms, and it is much easier for a beginner to implement these than it is to figure out modern graphics APIs. Furthermore, by implementing one or both of these, the beginner will be introduced to many concepts that are fundamental to computer graphics, like dot products, cross products, transformation matrices, cameras, and so on, without having to waste time wrestling with modern graphics APIs. I believe that these frustrating graphics APIs turn a lot of beginners off graphics, and making your first computer graphics project a rasterizer or a raytracer is a good way of getting around this initial hurdle.

Note that one large advantage of writing a software rasterizer before learning a graphics API is that it becomes much easier to debug things when they inevitably go wrong somewhere, since these APIs basically just provide an interface to a GPU-based rasterizer (note to pedants: yes, this is a great simplification, since they provide access to things like compute shaders as well). Once you know how these APIs work behind the scenes, it becomes much easier to debug your code.

For writing a raytracer, I always recommend reading Peter Shirley's books. For writing a software rasterizer, see these resources: 1, 2, 3, 4.

Advice 2: Learn the Necessary Math

My next advice is that you should study the math you need for computer graphics. The number of math concepts and techniques I use in my day-to-day work as a graphics programmer is surprisingly small, so this is not as much work as you might think. When you are a beginner in graphics, a field of mathematics called 'linear algebra' will be your main tool of choice. The concepts that you will mostly be using are listed below:

- Dot Product

- Cross Product

- Spherical Coordinates

- Transformation Matrix (hint: you will mostly be using nothing but 4x4 matrices as a graphics programmer, so do not spend any time on studying large matrices)

- Rotation Matrix, Scaling Matrix, Translation Matrix, Homogeneous Coordinates, Quaternions

- Orthonormal Basis Matrix

- Intersection calculations. Mostly things like calculating the intersection between a ray and a sphere, or a plane, or a triangle.

- Column-major versus row-major order is a detail that trips up many beginners in my experience, so do make sure you fully understand it. Read this article for a good explanation.

- How to model a camera, with the view matrix and perspective transformation matrix. This is something that a lot of beginners struggle with, so this is a topic that should be studied carefully and in depth. For the perspective matrix, see this tutorial. For the view matrix, see this.
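
As a small illustration of how far the dot product and cross product get you, here is a sketch of the ray-sphere intersection mentioned in the list. It assumes the usual quadratic-equation formulation; the helper names (`dot`, `cross`, `sub`, `ray_sphere`) are mine, and vectors are plain 3-tuples to keep the example self-contained.

```python
import math

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def ray_sphere(origin, direction, center, radius):
    """Return the nearest t >= 0 where origin + t * direction hits the sphere, or None."""
    # Substituting the ray into |p - center|^2 = radius^2 gives a*t^2 + b*t + c = 0.
    oc = sub(origin, center)
    a = dot(direction, direction)
    b = 2.0 * dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None  # no real roots: the ray misses the sphere
    sqrt_disc = math.sqrt(disc)
    t_near = (-b - sqrt_disc) / (2.0 * a)
    t_far = (-b + sqrt_disc) / (2.0 * a)
    if t_near >= 0.0:
        return t_near
    if t_far >= 0.0:
        return t_far  # the ray starts inside the sphere
    return None  # the sphere is entirely behind the ray

# A ray from the origin looking down -z toward a unit sphere 5 units away.
print(ray_sphere((0, 0, 0), (0, 0, -1), (0, 0, -5), 1.0))
# The cross product of the x and y axes gives the z axis.
print(cross((1, 0, 0), (0, 1, 0)))
```

The same pattern (plug the ray equation into the surface equation and solve for t) carries over to planes and triangles.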

From the beginner to intermediate level, you will mostly not encounter any other math than the above. Once you get into topics like physically based shading, a field of mathematics called 'calculus' also becomes useful, but that is a story for another day :-).

I will list some resources for learning linear algebra. A good online math book on the topic is immersive linear algebra. A good video series that allows you to visualize many of the concepts is Essence of linear algebra. Also, this OpenGL tutorial has good explanations of elementary, yet useful, linear algebra concepts. Another resource is The Graphics Codex.

Advice 3: Debugging Tips When Drawing Your First Triangle

Once you have written a raytracer or rasterizer, you will feel more confident in learning a graphics API. The hello world of learning a graphics API is to simply draw a triangle on the screen. It can actually be surprisingly difficult to draw your first triangle, since a large amount of boilerplate is usually necessary, and debugging graphics code tends to be difficult for beginners. In case you have problems with drawing your first triangle, and are getting a black screen instead of a triangle, I will list some debugging advice below. It is a summary of the steps I usually go through when I run into the same issue.

- Usually, the issue lies in the projection and view matrices, since they are easy to get wrong. In the vertex shader, you apply to every vertex first the model matrix, then the view matrix, and then the projection matrix, and finally the perspective divide is done (although this last divide is usually handled behind the scenes, and not something you do explicitly). Try doing this process by hand to sanity check your matrices. If you expect a vertex to be visible, then after the perspective divide the vertex will be in normalized device coordinates, and x should be in range [-1,+1], y in range [-1,+1], and z in range [-1,+1] in OpenGL (z in range [0,1] in Direct3D). If the coordinate values are not in this range, then a vertex you expected to be visible is not visible (since everything outside this range is clipped by the hardware), and something is likely wrong with your matrices.

- Did you remember to clear the depth buffer to sensible values? For instance, if you use a depth comparison function of D3DCMP_LESS (Direct3D), and then clear the depth buffer to 0, then nothing will ever be drawn, because nothing will ever pass the depth test! To sum up, make sure that you fully understand the depth test, and that you configure sensible depth testing settings.

- Make sure you correctly upload your matrices (like the view and projection matrices) to the GPU. It is surprisingly easy to forget to upload that data. You can verify the uploaded matrices in a GPU debugger like RenderDoc. Similarly, make sure that you upload all your vertex data correctly. Uploading only a part of your vertex data, because of a miscalculated buffer size, is a common mistake.

- Backface culling is another detail that trips up a lot of beginners. In Direct3D, for instance, back-facing triangles are culled by default (in OpenGL, culling is off until you call glEnable(GL_CULL_FACE)), and if you make a back-facing triangle while culling is on, it will not be rendered at all. My recommendation is to temporarily disable backface culling when you are trying to render your first triangle.

- Check all error codes returned by the functions of the graphics API, because they might contain useful information. If your API offers some kind of debugging layer, like the validation layers in Vulkan, you should enable it.

- For doing any kind of graphics debugging, I strongly recommend learning some kind of GPU debugging tool, like RenderDoc or Nsight. These tools provide you with an overview of the current state of the GPU for every step of your graphics application. They allow you to easily see whether you have correctly uploaded your matrices, and to inspect your depth buffer, depth comparison settings, backface culling settings, and so on. All state that you can set in the graphics API can easily be inspected in such programs. Another feature of RenderDoc that I really like and use a lot is that it allows you to step through the fragment shader of a pixel (this feature appears to be exclusive to Direct3D at the time of writing though). You simply click on a pixel, and RenderDoc allows you to step through the fragment shader that was evaluated and gave the pixel its current color value. This feature is shown in the gif below. I click on an orange pixel, and then step through the fragment shader calculations that caused the pixel to be assigned this color. Check out Baldur Karlsson's YouTube channel if you want to see more RenderDoc features.
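
The first tip, running a vertex through your matrices by hand, can also be scripted. The sketch below is one way to do it under stated assumptions: OpenGL conventions (column vectors, camera looking down -z, NDC z in [-1,+1]), a perspective matrix laid out like the classic `gluPerspective` one, and helper names (`perspective`, `translation`, `to_ndc`, `is_visible`) that I made up for this example. For Direct3D you would need a different projection matrix and a z range of [0,1].

```python
import math

def perspective(fovy_deg, aspect, near, far):
    """OpenGL-style perspective matrix (row-major nested lists, column vectors)."""
    f = 1.0 / math.tan(math.radians(fovy_deg) / 2.0)
    return [
        [f / aspect, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ]

def translation(tx, ty, tz):
    return [
        [1.0, 0.0, 0.0, tx],
        [0.0, 1.0, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]

def transform(m, v):
    """Multiply a 4x4 matrix by a 4-component column vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]

def to_ndc(model, view, proj, position):
    """Apply model, view, projection, then the perspective divide."""
    v = [position[0], position[1], position[2], 1.0]
    for m in (model, view, proj):
        v = transform(m, v)
    if v[3] <= 0.0:
        return None  # at or behind the camera plane, so it is clipped
    return (v[0] / v[3], v[1] / v[3], v[2] / v[3])

def is_visible(ndc):
    return ndc is not None and all(-1.0 <= c <= 1.0 for c in ndc)

IDENTITY = translation(0.0, 0.0, 0.0)
view = translation(0.0, 0.0, -3.0)  # camera sits at z = +3, looking down -z
proj = perspective(60.0, 16.0 / 9.0, 0.1, 100.0)

for vertex in [(-0.5, -0.5, 0.0), (0.5, -0.5, 0.0), (0.0, 0.5, 0.0)]:
    ndc = to_ndc(IDENTITY, view, proj, vertex)
    print(vertex, "->", ndc, "visible" if is_visible(ndc) else "NOT visible")
```

If a vertex you expect to see is reported as not visible here, the matrices are the first suspect, before you even touch the GPU.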

Advice 4: Good Projects for Beginners

In my view, the best way to become good at graphics is to implement various rendering techniques by yourself. Below is a list of suggested projects that a beginner can implement and learn from.

- Make a sphere mesh using spherical coordinates, and render it.

- Implement a shader for simple diffuse and specular shading.

- Directional lights, point lights, and spot lights

- Heightmap Rendering

- Write a simple parser for a simple mesh format such as Wavefront .obj, import it into your program and render it. In particular, try and import and render meshes with textures.

- Implement a simple Minecraft renderer. It is surprisingly simple to render Minecraft-like worlds, and it is also very instructive.

- Render reflections using cubemaps

- Shadow rendering using shadow maps.

- Implement view frustum culling. This is a simple, yet very practical optimization technique.

- Implement rendering of particle systems

- Learn how to implement Gamma Correction.

- Implement normal mapping

- Learn how to render lots of meshes efficiently with instanced rendering

- Animate meshes with mesh skinning.
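
As a starting point for the first project in the list, here is a sketch of generating a sphere mesh from spherical coordinates. The function name `make_sphere` and the `stacks`/`slices` parameters are my own choices, y is assumed to be the up axis, and the triangle winding may need to be flipped depending on your culling setup.

```python
import math

def make_sphere(radius, stacks, slices):
    """Return (vertices, triangles) for a UV sphere centered at the origin."""
    vertices = []
    for i in range(stacks + 1):
        theta = math.pi * i / stacks  # polar angle: 0 at the north pole, pi at the south
        for j in range(slices + 1):
            phi = 2.0 * math.pi * j / slices  # azimuth around the y axis
            # Spherical to Cartesian coordinates.
            x = radius * math.sin(theta) * math.cos(phi)
            y = radius * math.cos(theta)
            z = radius * math.sin(theta) * math.sin(phi)
            vertices.append((x, y, z))
    triangles = []
    row = slices + 1  # number of vertices per stack ring
    for i in range(stacks):
        for j in range(slices):
            a = i * row + j  # current ring
            b = a + row      # ring below
            # Two triangles per quad; at the poles one of them is degenerate.
            triangles.append((a, b, a + 1))
            triangles.append((a + 1, b, b + 1))
    return vertices, triangles

vertices, triangles = make_sphere(1.0, 8, 16)
print(len(vertices), "vertices,", len(triangles), "triangles")
```

The last column of vertices duplicates the first one on purpose, which makes it straightforward to add texture coordinates later without a seam.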

And here are also some more advanced techniques:

- Various post-processing effects, like bloom (using Gaussian blur), ambient occlusion with SSAO, and anti-aliasing with FXAA.

- Implement deferred shading, a technique useful for rendering many light sources.

And this concludes the article. That was all the advice I had to offer on this topic.